AR jam demo

In this fun little project, you'll activate and manipulate different audio channels with your hands and fingers in real time. Nice, right?

Before you begin

Before getting started, set up your development environment by following the steps in the Quickstart.

It also helps to have gone through the Handtracker in shared AR experience tutorial.

Unity & package versions used in this demo

- Unity 2022.3.11f1

- Auki Labs ConjureKit Ur module v0.6.5

- AR Foundation v5.1.0

- ARKit XR Plugin v5.1.0

Configuration

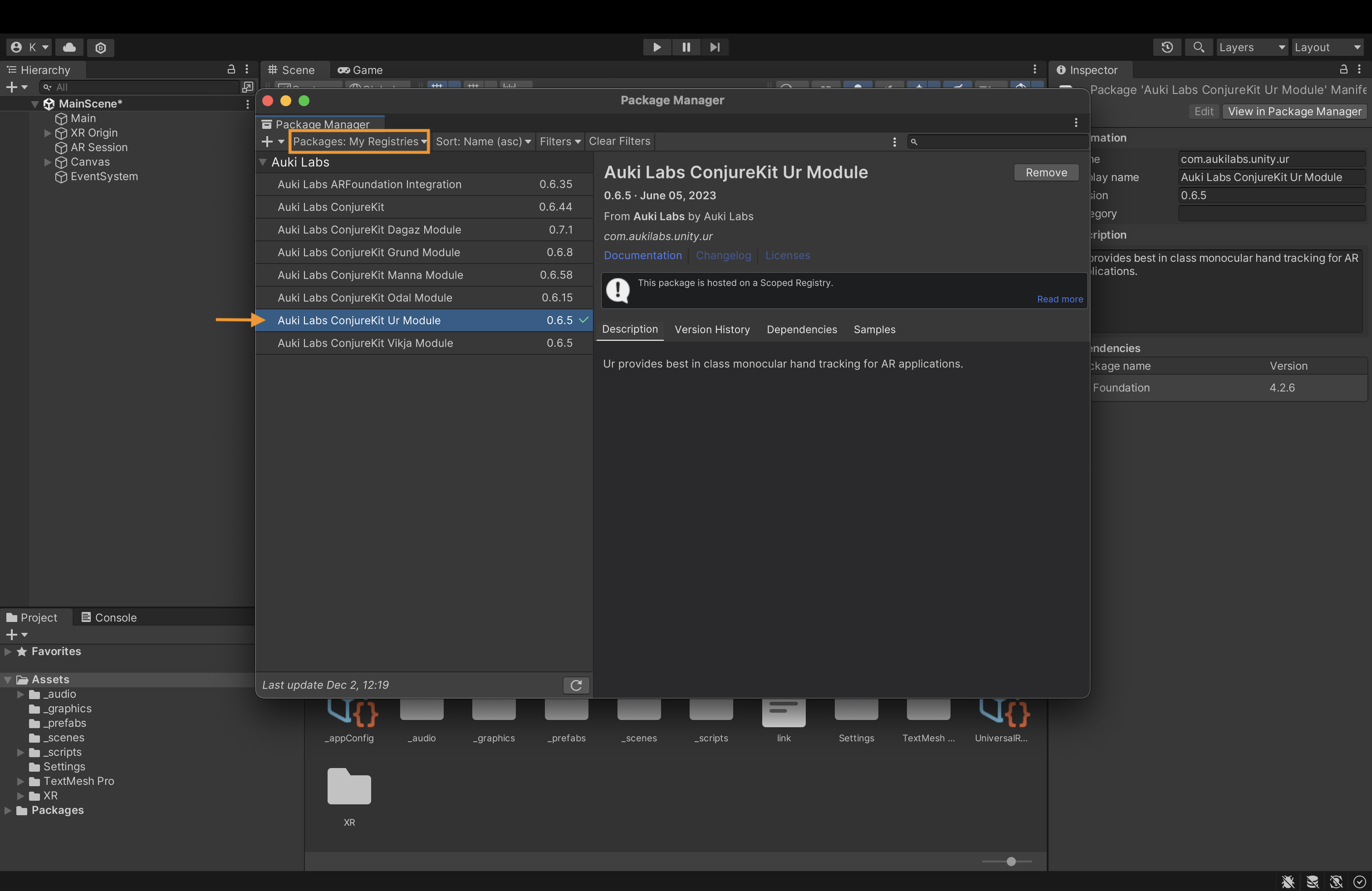

After downloading the project from GitHub, open the Package Manager: the ConjureKit modules are listed under My Registries. This project uses only Ur.

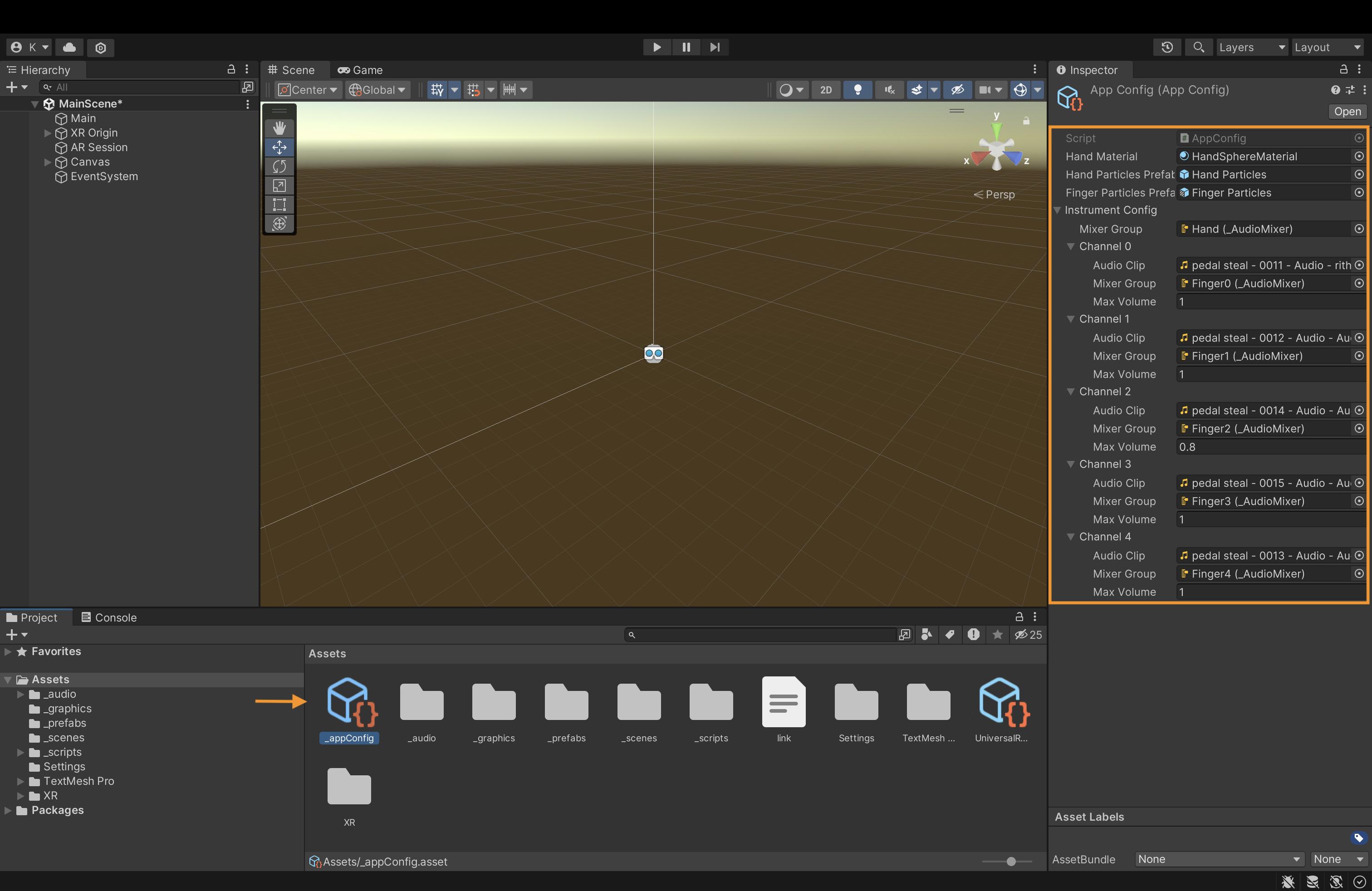

A ScriptableObject named AppConfig stores all the graphical and audio assets used in this project. These include the material applied to tracked hands, the particle effects, and one main audio mixer for the hand. It also holds five audio clips with their mixer groups, one per finger, each with a volume parameter.

Main Classes

Now, let's review the project's main classes.

AppMain.cs

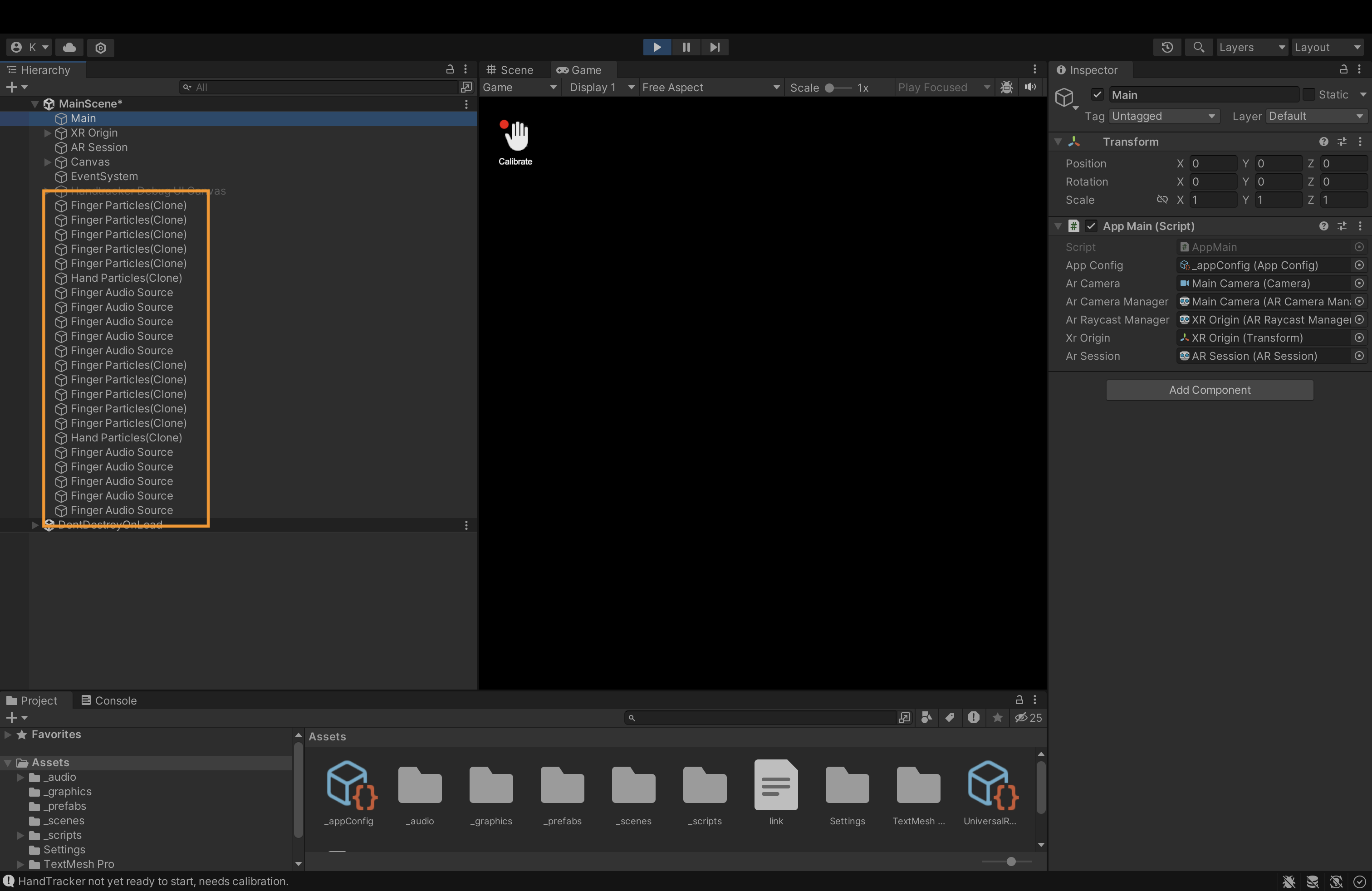

The scene hierarchy has a Main GameObject with the AppMain component attached. It holds references to the AppConfig and to the AR Foundation components this project needs.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
// Plus the ConjureKit Ur namespace that provides HandTracker.

public class AppMain : MonoBehaviour
{
    [SerializeField] private AppConfig appConfig;
    [SerializeField] private Camera arCamera;
    [SerializeField] private ARCameraManager arCameraManager;
    [SerializeField] private ARRaycastManager arRaycastManager;
    [SerializeField] private Transform xrOrigin;
    [SerializeField] private ARSession arSession;
```

AppMain also declares a HandService, our own class that responds to hand-tracking updates. We create it in Start(). Every frame, Update() ticks the Ur hand tracker and updates the hand instruments.

```csharp
    private HandService m_HandService;

    void Start()
    {
        m_HandService = new HandService(arSession, arCamera, arRaycastManager,
            appConfig.handParticlesPrefab, appConfig.fingerParticlesPrefab);
        m_HandService.SetInstrumentConfig(appConfig.instrumentConfig);

        // Coroutine defined elsewhere in AppMain (not shown in this excerpt).
        StartCoroutine(StartHandTrackingWithDelay());
    }

    void Update()
    {
        // Tick the Ur hand tracker every frame, then drive the hand instruments.
        HandTracker.GetInstance().Update();
        m_HandService?.UpdateLocalInstruments();
    }
}
```

HandService.cs

HandService spawns the hand and finger objects and continuously tracks their position and movement using Ur. It creates MAX_HAND_COUNT pairs of TrackedHand and HandInstrument objects (two by default), so two hands can be tracked simultaneously. Each TrackedHand's OnHandAppear and OnHandDisappear events start and stop its HandInstrument. To track a different number of hands, change MAX_HAND_COUNT.

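```csharp
using System.Collections.Generic;
using System.Linq;
using UnityEngine;
using UnityEngine.XR.ARFoundation;
// Plus the ConjureKit Ur namespace that provides HandTracker.

internal class HandService
{
    private const int MAX_HAND_COUNT = 2;

    private readonly Camera m_ArCamera;
    private readonly GameObject m_HandParticlesPrefab;
    private readonly GameObject m_FingerParticlesPrefab;

    private TrackedHand[] m_Hands;
    private HandInstrument[] m_HandInstruments;

    public HandService(ARSession arSession, Camera arCamera, ARRaycastManager arRaycastManager,
        GameObject handParticlesPrefab, GameObject fingerParticlesPrefab)
    {
        m_ArCamera = arCamera;
        m_HandParticlesPrefab = handParticlesPrefab;
        m_FingerParticlesPrefab = fingerParticlesPrefab;

        // Hand the AR Foundation session, camera, and raycaster to Ur,
        // and subscribe to its hand-tracking updates.
        HandTracker.GetInstance().SetARSystem(arSession, arCamera, arRaycastManager);
        HandTracker.GetInstance().OnUpdate += OnUpdate;

        // One instrument per trackable hand.
        m_HandInstruments = new HandInstrument[MAX_HAND_COUNT];
        for (int i = 0; i < MAX_HAND_COUNT; i++)
        {
            m_HandInstruments[i] = new HandInstrument();
        }

        // One tracked-hand slot per instrument; a hand appearing or disappearing
        // starts or stops the matching instrument.
        m_Hands = new TrackedHand[MAX_HAND_COUNT];
        for (int i = 0; i < MAX_HAND_COUNT; i++)
        {
            m_Hands[i] = new TrackedHand();
            m_Hands[i].OnHandAppear += m_HandInstruments[i].Start;
            m_Hands[i].OnHandDisappear += m_HandInstruments[i].Stop;
        }
    }

    // OnUpdate() is walked through below; SetInstrumentConfig() and
    // UpdateLocalInstruments() are omitted here.
}
```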

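Each HandInstrument represents one hand. When initialized, it spawns five audio sources and five particle prefabs, one per finger. This happens when the app starts, before any hand is detected.

OnUpdate(), the callback subscribed to HandTracker.OnUpdate, receives the data for all detected hands as flat arrays. Each landmark takes three floats (x, y, z), so the number of hands is landmarks.Length / 3 / HandTracker.LandmarksCount. We skip hands whose detection score is below 0.3 and add the rest to a temporary list.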

```csharp
private void OnUpdate(float[] landmarks, float[] translations, int[] isRightHands, float[] scores)
{
    List<DetectedHand> detectedHands = new List<DetectedHand>();

    // Three floats (x, y, z) per landmark, LandmarksCount landmarks per hand.
    int handCountInArrays = landmarks.Length / 3 / HandTracker.LandmarksCount;

    for (int handIndex = 0; handIndex < handCountInArrays; handIndex++)
    {
        // Ignore low-confidence detections.
        float score = scores[handIndex];
        if (score < 0.3f)
        {
            continue;
        }

        detectedHands.Add(DetectedHand.FromArraysWithOffset(landmarks, translations, handIndex, m_ArCamera.transform));
    }

    // ... OnUpdate() continues below
```
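The array arithmetic in OnUpdate() is easier to see in isolation. The following is a minimal sketch in plain C# with no Unity or Ur types. It assumes that landmarks packs three floats (x, y, z) per landmark, as the division by 3 implies, and that each hand's landmarks follow the previous hand's. The 21-landmark count and the HandArrays helper are assumptions for illustration, not part of Ur's API.

```csharp
using System.Collections.Generic;

// Hypothetical helper mirroring the array arithmetic in HandService.OnUpdate().
// Assumes 3 floats (x, y, z) per landmark, 21 landmarks per hand, and hands
// packed back to back in one flat array.
public static class HandArrays
{
    public const int LandmarksCount = 21;  // assumed value of HandTracker.LandmarksCount
    public const float MinScore = 0.3f;    // same threshold as OnUpdate()

    // Number of hands whose landmarks are stored in the flat array.
    public static int CountHands(float[] landmarks) =>
        landmarks.Length / 3 / LandmarksCount;

    // Index of the first float that belongs to hand `handIndex` (assumed layout).
    public static int HandOffset(int handIndex) =>
        handIndex * LandmarksCount * 3;

    // Indices of the hands whose detection score passes the threshold.
    public static List<int> ConfidentHands(float[] landmarks, float[] scores)
    {
        var confident = new List<int>();
        int handCount = CountHands(landmarks);
        for (int handIndex = 0; handIndex < handCount; handIndex++)
        {
            if (scores[handIndex] < MinScore)
                continue; // low-confidence detection: ignore it this update
            confident.Add(handIndex);
        }
        return confident;
    }
}
```

With two hands in view, landmarks holds 2 × 21 × 3 = 126 floats. CountHands therefore returns 2, and under this layout the second hand's data starts at index 63.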

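Because several hands can be detected at once, we need to decide which tracked hand each detection belongs to. For every detected hand, we look for the tracked hand from previous frames whose last known position (worldPosition) is closest to the detection's first landmark. If that distance is under 0.2 m, we treat them as the same hand and update the tracked hand with the new data.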

```csharp
    // Pair each detected hand with the nearest tracked hand from previous frames.
    List<DetectedHand> unmatchedDetectedHands = new List<DetectedHand>(detectedHands);
    List<TrackedHand> unmatchedTrackedHands = new List<TrackedHand>(m_Hands);

    foreach (var detectedHand in detectedHands)
    {
        float minDistance = float.MaxValue;
        TrackedHand closestHand = null;

        foreach (var trackedHand in unmatchedTrackedHands)
        {
            if (trackedHand == null || detectedHand.landmarksWorld == null || trackedHand.worldLandmarks == null)
                continue;

            // Distance from the detection's landmark 0 to the tracked hand's last position.
            float distance = Vector3.Distance(detectedHand.landmarksWorld[0], trackedHand.worldPosition);
            if (distance < minDistance)
            {
                minDistance = distance;
                closestHand = trackedHand;
            }
        }

        // Closer than 0.2 m: same hand, so update it with the new detection.
        if (closestHand != null && minDistance < 0.2f)
        {
            unmatchedDetectedHands.Remove(detectedHand);
            unmatchedTrackedHands.Remove(closestHand);
            closestHand.UpdateTracking(detectedHand);
        }
    }
```
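Here is the same matching rule as a small stand-alone sketch. It uses System.Numerics.Vector3 instead of UnityEngine.Vector3 so it runs outside Unity. Each hand is reduced to a single position: a detection's landmark 0 and a tracked hand's worldPosition. The HandMatcher name and its index-based return value are hypothetical.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical, simplified model of the matching pass in HandService.OnUpdate().
public static class HandMatcher
{
    public const float MaxMatchDistance = 0.2f; // metres, same threshold as OnUpdate()

    // For each detected position, returns the index of the tracked hand it continues,
    // or -1 if no unclaimed tracked hand is close enough.
    public static int[] Match(IReadOnlyList<Vector3> detected, IReadOnlyList<Vector3> tracked)
    {
        var matches = new int[detected.Count];
        var unmatchedTracked = new List<int>();
        for (int t = 0; t < tracked.Count; t++)
            unmatchedTracked.Add(t);

        for (int d = 0; d < detected.Count; d++)
        {
            float minDistance = float.MaxValue;
            int closest = -1;
            foreach (int t in unmatchedTracked)
            {
                float distance = Vector3.Distance(detected[d], tracked[t]);
                if (distance < minDistance)
                {
                    minDistance = distance;
                    closest = t;
                }
            }

            if (closest >= 0 && minDistance < MaxMatchDistance)
            {
                unmatchedTracked.Remove(closest); // each tracked hand matches at most once
                matches[d] = closest;
            }
            else
            {
                matches[d] = -1;
            }
        }
        return matches;
    }
}
```

Like the original loop, this is greedy. Detections are processed in order, so an earlier detection can claim a tracked hand that a later detection is even closer to. With at most two hands, that is rarely an issue.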

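Detections that didn't match any tracked hand are then assigned to free slots, meaning TrackedHand objects that aren't currently tracking a hand. Tracked hands left without a detection are marked as not tracking. Finally, each currently tracked hand is passed to its HandInstrument through UpdateTracking().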

```csharp
    // Give the remaining detections to tracked-hand slots that aren't in use.
    var unusedHands = unmatchedTrackedHands.Where(hand => !hand.isCurrentlyTracked).ToList();
    foreach (var detectedHand in unmatchedDetectedHands)
    {
        var trackedHand = unusedHands.FirstOrDefault();
        if (trackedHand != null)
        {
            unusedHands.Remove(trackedHand);
            unmatchedTrackedHands.Remove(trackedHand);
            trackedHand.UpdateTracking(detectedHand);
        }
        else
        {
            Debug.Log("Detected more hands than the trackable hands count, or some logic is wrong.");
        }
    }

    // Update unmatched tracked hands to not tracking.
    foreach (var trackedHand in unmatchedTrackedHands)
    {
        if (trackedHand != null)
            trackedHand.UpdateNotTracking();
    }

    // Feed every currently tracked hand to its instrument.
    for (int i = 0; i < MAX_HAND_COUNT; i++)
    {
        if (m_Hands[i] == null || m_HandInstruments[i] == null || m_Hands[i].worldLandmarks == null)
            continue;

        if (m_Hands[i].isCurrentlyTracked)
            m_HandInstruments[i].UpdateTracking(m_Hands[i]);
    }
}
```
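The slot bookkeeping can be modeled with plain indices. This hypothetical HandSlots sketch treats each TrackedHand as a slot with an is-tracked flag. It assumes that UpdateTracking() sets that flag and that UpdateNotTracking() clears it immediately. The real TrackedHand may behave differently, for example by keeping a hand alive for a few frames.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of the second half of HandService.OnUpdate(). Each TrackedHand
// is reduced to a slot index plus an "is tracked" flag.
public static class HandSlots
{
    // isTracked:    per-slot flag from the previous update; updated in place.
    // matchedSlots: slots already claimed by the distance-matching pass.
    // Returns the slot given to each unmatched detection, or -1 if none was free.
    public static int[] Assign(bool[] isTracked, ISet<int> matchedSlots, int unmatchedDetectionCount)
    {
        // Counterpart of unmatchedTrackedHands.
        List<int> unmatchedSlots = Enumerable.Range(0, isTracked.Length)
            .Where(slot => !matchedSlots.Contains(slot))
            .ToList();

        // Counterpart of unusedHands: only slots not tracking a hand can take a new one.
        List<int> unusedSlots = unmatchedSlots.Where(slot => !isTracked[slot]).ToList();

        var assigned = new int[unmatchedDetectionCount];
        for (int d = 0; d < unmatchedDetectionCount; d++)
        {
            if (unusedSlots.Count == 0)
            {
                assigned[d] = -1; // the original logs a message here
                continue;
            }
            int slot = unusedSlots[0];
            unusedSlots.RemoveAt(0);
            unmatchedSlots.Remove(slot);
            isTracked[slot] = true;        // assumed effect of TrackedHand.UpdateTracking()
            assigned[d] = slot;
        }

        foreach (int slot in unmatchedSlots)
            isTracked[slot] = false;       // assumed effect of TrackedHand.UpdateNotTracking()
        foreach (int slot in matchedSlots)
            isTracked[slot] = true;        // matched hands were updated with new data

        return assigned;
    }
}
```

A slot that was tracking a hand but found no match this update is not reused in the same update. It is still flagged as tracked when free slots are chosen, so a new detection only gets a slot if another one is free.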

HandInstrument.cs

The HandInstrument.cs class is responsible for activating the instruments and particles based on the hand and finger positions.

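UpdateTracking() estimates the hand position as the average of four landmarks. These are landmark 0 (the wrist) and the base knuckles of the index, middle, and ring fingers (landmarks 5, 9, and 13), which together approximate the center of the palm.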

```csharp
public void UpdateTracking(TrackedHand hand)
{
    var landmarks = hand.worldLandmarks;
    m_HandPosition = (landmarks[0] + landmarks[5] + landmarks[9] + landmarks[13]) / 4f; // Approximately palm center
    // ... the finger updates are covered further down
}
```

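In UpdateInstrument(), which HandService calls every frame, we turn the hand position into a height parameter. This is the vertical angle between the camera's forward direction and the hand, clamped to ±40° and mapped to a range from 0 (hand low) to 1 (hand high). When the parameter drops below 0.4, the low-pass cutoff on the hand's mixer group sweeps down from 18 kHz toward 100 Hz. When it rises above 0.6, the high-pass cutoff sweeps up from 10 Hz toward 3 kHz.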

```csharp
public void UpdateInstrument()
{
    if (isPlaying)
    {
        // Vertical angle of the hand relative to the camera's forward direction,
        // clamped to ±40° and mapped to [0, 1]: 0 = hand low, 1 = hand high.
        Vector3 towardsHand = m_HandPosition - m_CameraTransform.position;
        float heightParam = Vector3.SignedAngle(m_CameraTransform.forward, towardsHand, m_CameraTransform.right);
        heightParam = Mathf.Clamp(heightParam / 40.0f, -1.0f, 1.0f) * 0.5f + 0.5f;
        heightParam = Mathf.Clamp01(1 - heightParam);

        // Smoothed copy; note that the filter cutoffs below use the raw heightParam.
        m_HeightParam = Mathf.Lerp(m_HeightParam, heightParam, 0.2f);

        // Low hand: sweep the low-pass cutoff from 18 kHz down to 100 Hz.
        float lowpass = 18000;
        if (heightParam < 0.4f)
        {
            float factor = Mathf.Clamp01(0.4f - heightParam) / 0.4f;
            lowpass = Mathf.Lerp(18000, 100, Mathf.Pow(factor, 0.3f));
        }
        // Requires exposed mixer parameters named "<group name>_lowpass" / "<group name>_highpass".
        m_MixerGroup.audioMixer.SetFloat(m_MixerGroup.name + "_lowpass", lowpass);

        // High hand: sweep the high-pass cutoff from 10 Hz up to 3 kHz.
        float highpass = 10;
        if (heightParam > 0.6f)
        {
            float factor = Mathf.Clamp01(heightParam - 0.6f) / 0.4f;
            highpass = Mathf.Lerp(10, 3000, factor);
        }
        m_MixerGroup.audioMixer.SetFloat(m_MixerGroup.name + "_highpass", highpass);
    }

    // Fade the whole hand in or out, then update each finger.
    m_VolumeMultiplier = Mathf.Lerp(m_VolumeMultiplier, isPlaying ? 1.0f : 0.0f, 0.15f);
    foreach (var finger in m_Fingers)
    {
        finger.UpdateInstrument(m_VolumeMultiplier);
    }

    if (m_HandParticles != null)
    {
        m_HandParticles.transform.position = m_HandPosition;

        // Hand particle emission follows the average finger intensity.
        var emission = m_HandParticles.emission;
        float intensity = 0;
        foreach (var finger in m_Fingers)
        {
            intensity += finger.audioVolume + finger.audioImpulse * 0.5f;
        }
        intensity /= m_Fingers.Length;
        if (intensity < 0)
            intensity = 0;

        var rate = emission.rateOverTime;
        rate.constant = Mathf.Clamp(intensity * intensity * 15, 0, 80);
        emission.rateOverTime = rate;
    }
}
```
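To check which hand heights produce which cutoffs, the mapping can be pulled out into pure functions. This sketch reproduces the arithmetic of UpdateInstrument() using System.Math in place of Unity's Mathf. It takes the signed angle as input rather than computing it. The HeightFilter class and its method names are hypothetical.

```csharp
using System;

// Hypothetical pure-function version of the filter mapping in
// HandInstrument.UpdateInstrument(), with Unity's Mathf replaced by System.Math.
public static class HeightFilter
{
    // signedAngleDeg: Vector3.SignedAngle(camera forward, towards hand, camera right).
    // In Unity this is positive when the hand is below the view center.
    public static float HeightParam(float signedAngleDeg)
    {
        float h = Math.Clamp(signedAngleDeg / 40.0f, -1.0f, 1.0f) * 0.5f + 0.5f;
        return Math.Clamp(1 - h, 0f, 1f); // 0 = 40° or more below center, 1 = 40° or more above
    }

    // Low-pass cutoff in Hz: fully open (18 kHz) unless the hand is low.
    public static float LowpassHz(float heightParam)
    {
        if (heightParam >= 0.4f) return 18000f;
        float factor = Math.Clamp(0.4f - heightParam, 0f, 1f) / 0.4f;
        return Lerp(18000f, 100f, MathF.Pow(factor, 0.3f));
    }

    // High-pass cutoff in Hz: effectively off (10 Hz) unless the hand is high.
    public static float HighpassHz(float heightParam)
    {
        if (heightParam <= 0.6f) return 10f;
        float factor = Math.Clamp(heightParam - 0.6f, 0f, 1f) / 0.4f;
        return Lerp(10f, 3000f, factor);
    }

    // Same behavior as Mathf.Lerp: t is clamped to [0, 1].
    private static float Lerp(float a, float b, float t) =>
        a + (b - a) * Math.Clamp(t, 0f, 1f);
}
```

For example, a hand 20° above the view center gives a height parameter of 0.75 and a high-pass cutoff of about 1131 Hz. Anything between 0.4 and 0.6 (roughly ±8° around the center) leaves both filters open.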

UpdateTracking() also passes each FingerInstrument the four landmarks of its finger: the knuckle, the two middle joints, and the fingertip. For the full landmark layout, see the Ur documentation.

```csharp
public void UpdateTracking(TrackedHand hand)
{
    var landmarks = hand.worldLandmarks;
    m_HandPosition = (landmarks[0] + landmarks[5] + landmarks[9] + landmarks[13]) / 4f; // Approximately palm center

    // Each finger gets its four landmarks, from knuckle to tip.
    m_Fingers[0].UpdateTracking(landmarks[1], landmarks[2], landmarks[3], landmarks[4]);
    m_Fingers[1].UpdateTracking(landmarks[5], landmarks[6], landmarks[7], landmarks[8]);
    m_Fingers[2].UpdateTracking(landmarks[9], landmarks[10], landmarks[11], landmarks[12]);
    m_Fingers[3].UpdateTracking(landmarks[13], landmarks[14], landmarks[15], landmarks[16]);
    m_Fingers[4].UpdateTracking(landmarks[17], landmarks[18], landmarks[19], landmarks[20]);
}
```
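The indexing in UpdateTracking() follows a regular pattern. The fingers skip landmark 0, and finger f uses landmarks 4f+1 to 4f+4. This sketch spells that out. It assumes Ur follows the common 21-point hand layout, in which landmark 0 is the wrist and fingers run from thumb to little finger. That is consistent with the indices above, but check the Ur documentation. HandLandmarks is a hypothetical helper built on System.Numerics.

```csharp
using System;
using System.Numerics;

// Hypothetical helper spelling out the landmark indices used in
// HandInstrument.UpdateTracking(), assuming the common 21-point layout:
// landmark 0 = wrist, finger f (0 = thumb ... 4 = little) = landmarks 4f+1 .. 4f+4.
public static class HandLandmarks
{
    // The four landmark indices (knuckle, joint1, joint2, tip) of a finger.
    public static int[] FingerIndices(int finger)
    {
        if (finger < 0 || finger > 4)
            throw new ArgumentOutOfRangeException(nameof(finger));
        int first = 4 * finger + 1;
        return new[] { first, first + 1, first + 2, first + 3 };
    }

    // Palm-center estimate used by HandInstrument: mean of the wrist (0) and the
    // base knuckles of the index, middle, and ring fingers (5, 9, 13).
    public static Vector3 PalmCenter(Vector3[] landmarks) =>
        (landmarks[0] + landmarks[5] + landmarks[9] + landmarks[13]) / 4f;
}
```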

FingerInstrument.cs

FingerInstrument has its own UpdateTracking() method. From the finger's landmarks, it computes three values: the bend (the sum of the angles at the two middle joints), a smoothed bend speed, and the finger position (the average of the two middle joints and the tip). UpdateInstrument(), which is called every frame, then sets the finger's audio volume and particle emission.

```csharp
public void UpdateTracking(Vector3 knuckle, Vector3 joint1, Vector3 joint2, Vector3 tip)
{
    // Bend = angle at the first middle joint + angle at the second middle joint.
    float bend1 = Vector3.Angle(joint1 - knuckle, joint2 - joint1);
    float bend2 = Vector3.Angle(joint2 - joint1, tip - joint2);
    float bend = bend1 + bend2;

    // Time since the previous tracking update. Check for the first update before
    // overwriting the timestamp, so the first frame gets dt = 0 instead of a big jump.
    float dt = m_PreviousUpdateTrackingTime == 0.0f ? 0.0f : Time.time - m_PreviousUpdateTrackingTime;
    m_PreviousUpdateTrackingTime = Time.time;

    // Smoothed bend speed, in degrees per second.
    float bendDelta = bend - m_FingerBend;
    if (dt > 0)
    {
        float bendSpeed = bendDelta / dt;
        m_FingerBendSpeed = Mathf.Lerp(m_FingerBendSpeed, bendSpeed, 0.15f);
    }

    m_FingerBend = Mathf.Lerp(m_FingerBend, bend, 0.15f);
    m_FingerPosition = (joint1 + joint2 + tip) / 3f;
}

public void UpdateInstrument(float volumeMultiplier)
{
    // Squaring audioVolume makes quiet values quieter.
    m_AudioSource.volume = audioVolume * audioVolume * m_MaxVolume * volumeMultiplier;

    m_Particles.transform.position = m_FingerPosition;
    var emission = m_Particles.emission;
    emission.enabled = audioVolume > 0.1f;

    float intensity = audioVolume + audioImpulse;
    var rate = emission.rateOverTime;
    rate.constant = intensity * 20f;
    // rateOverTime returns a copy (MinMaxCurve is a struct), so assign it back.
    emission.rateOverTime = rate;
}
```
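The bend measure is plain vector math, so it can be tried outside Unity. This sketch reimplements it with System.Numerics. AngleDeg stands in for UnityEngine.Vector3.Angle, which returns the unsigned angle in degrees. The FingerBend class is hypothetical.

```csharp
using System;
using System.Numerics;

// Hypothetical stand-alone version of the bend calculation in
// FingerInstrument.UpdateTracking(), using System.Numerics instead of UnityEngine.
public static class FingerBend
{
    // Unsigned angle in degrees between two vectors, like UnityEngine.Vector3.Angle.
    public static float AngleDeg(Vector3 a, Vector3 b)
    {
        float denom = a.Length() * b.Length();
        if (denom < 1e-12f) return 0f; // degenerate (zero-length) segment
        float cos = Math.Clamp(Vector3.Dot(a, b) / denom, -1f, 1f);
        return MathF.Acos(cos) * 180f / MathF.PI;
    }

    // Total bend: angle at joint1 plus angle at joint2 (0° for a straight finger).
    public static float Bend(Vector3 knuckle, Vector3 joint1, Vector3 joint2, Vector3 tip) =>
        AngleDeg(joint1 - knuckle, joint2 - joint1) + AngleDeg(joint2 - joint1, tip - joint2);
}
```

A straight finger reads 0°, and each joint can add up to 180°. In the original class, both the bend and its speed are then smoothed with Mathf.Lerp(current, target, 0.15f) once per update. That is an exponential moving average whose effective time constant depends on how often tracking updates arrive.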

Calibration Flow

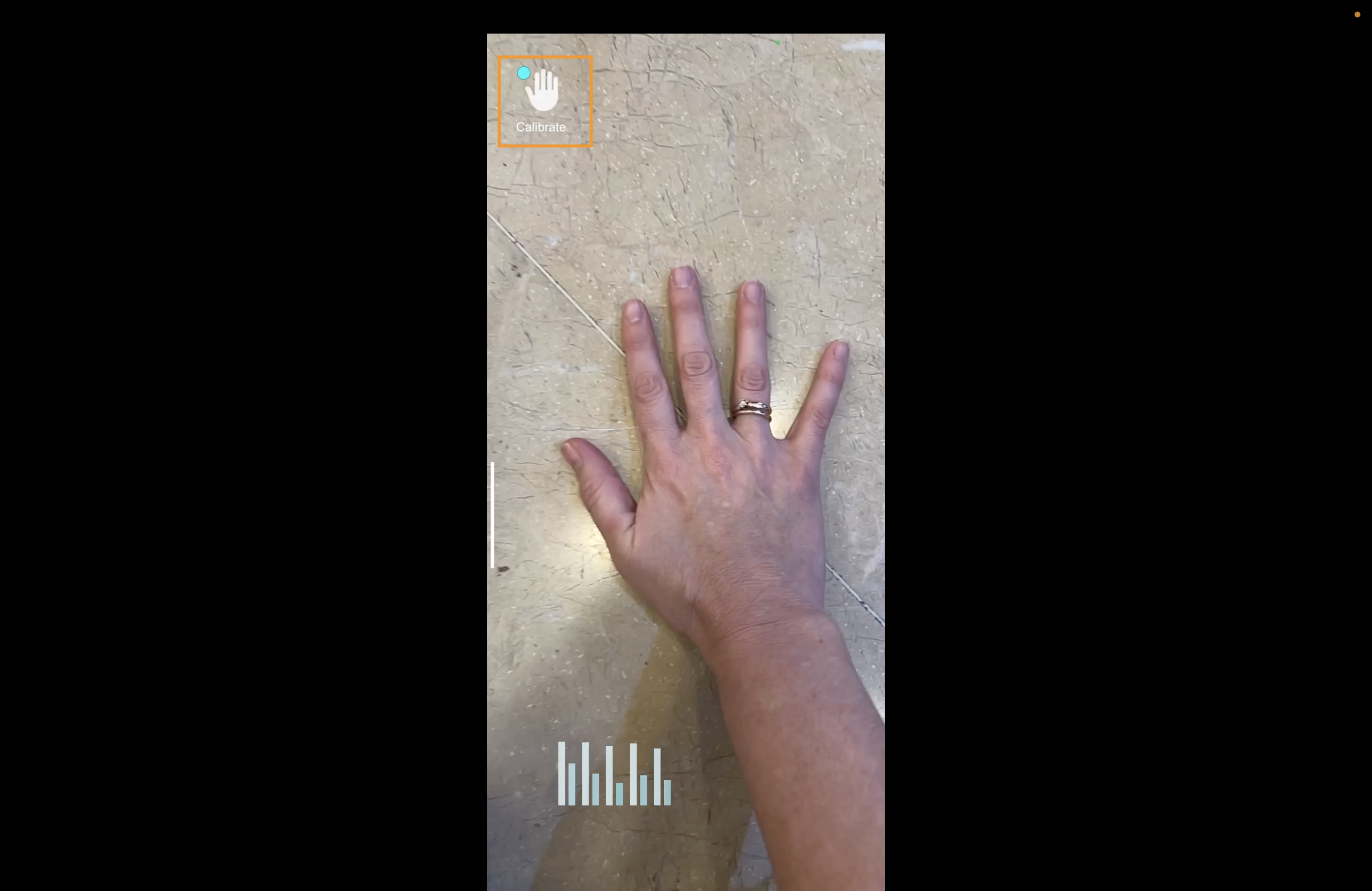

After building and running the app, you'll see a Calibrate button in the top-left corner of the screen. Calibration calculates a per-user "hand scale", which is essential for accurate 3D hand pose tracking.

After tapping the button, place your hand on a flat surface, point your device's camera at it, and move the device forward and backward. The calibration percentage goes up, and when calibration is complete, the dot next to the hand button turns green.

That's it. You are all set!

Changing the song

Oh, and one last tip: changing the song is easy. Use one of the many free AI tools to split any song you like into separate instrument tracks (stems), then replace the audio channels in AppConfig.

The full Unity project can be downloaded from GitHub.